Recently, our CTO Chris introduced the concept of just-in-time data modeling. This approach — building your data model during or after analysis, rather than modeling upfront — lets you get insights fast while distilling reusable definitions into your organization.

This workflow has been missing from BI tools for too long, so we built Omni to unlock it. With just-in-time data modeling, anyone can run fast, easy analysis without compromising trust or governance.

When we started talking to customers about just-in-time data modeling, they found the concept intuitive, but it really clicked once they saw it in action. So, in this post, I’ll walk you through a handful of examples of how just-in-time data modeling improves on traditional data modeling:

- Running a quick user cohort analysis
- Diagnosing a fast-changing metric
- Measuring the impact of a new sales channel
- Exploring a new dataset for the first time

Example 1: Running a quick user cohort analysis

If the data isn’t complex, a rigid semantic layer shouldn’t turn you into a bottleneck for your team. Get to the insights first, and if you find something you want to save and reuse, add it to your model later. For example:

It’s common to define user cohorts based on users’ first actions (e.g., users who placed their first orders in January, February, etc.). Usually, this requires creating a fact table of first orders and then joining it back into the rest of your data. It’s a simple process, but it can feel heavy to drop down to your data model just to create a simple table and define that extra join.

Instead, in Omni, you can (1) save any query as a new view and (2) automatically join that query into your existing data, letting you quickly calculate cohort metrics (think “days since first order” or “total sales in first week as a customer”). That way, you can get to key insights quicker – and decide later if this is worth hardening down to your database layer or shared model.

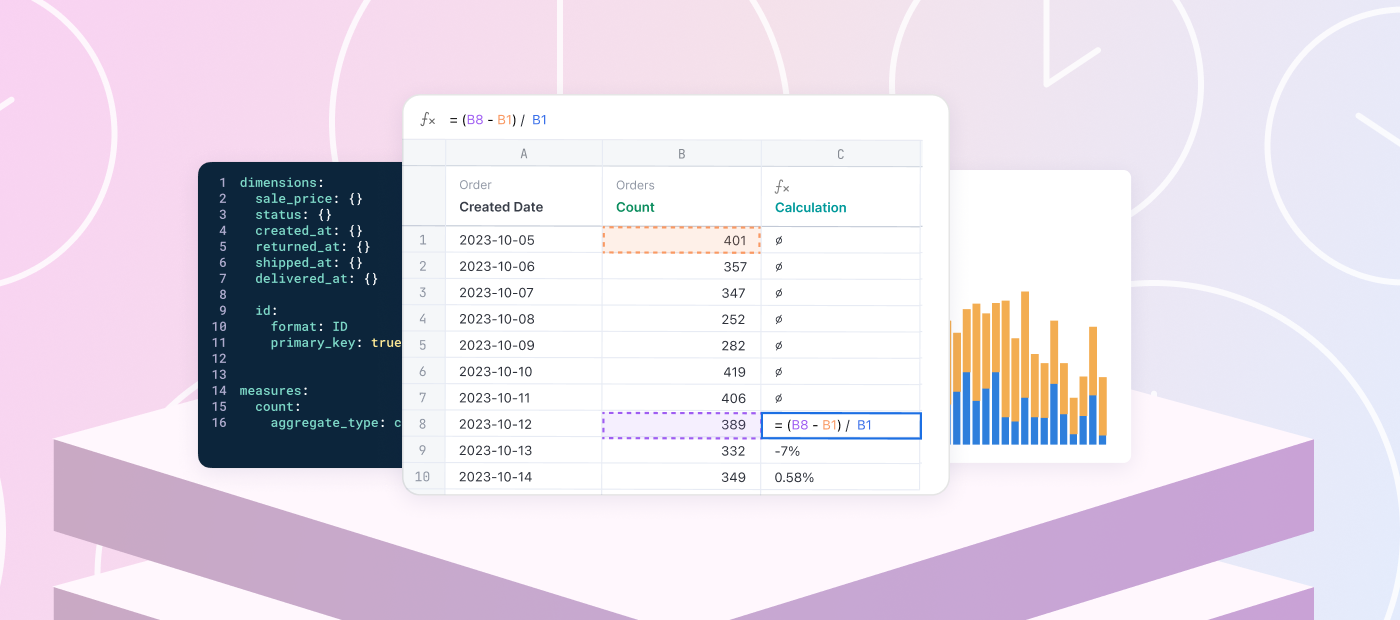
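To make the cohort fields above concrete, here is a minimal sketch in plain Python of the logic such a saved query encodes: find each user's first order, join that back onto every order, and derive "days since first order" and "total sales in first week as a customer." This is not how Omni implements it; the `Order` record, its field names, and the 7-day definition of "first week" are assumptions for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Order:
    # Hypothetical order record; real column names will differ.
    order_id: int
    user_id: int
    created_at: datetime
    sale_price: float


def first_order_dates(orders):
    """The 'first order' table: each user's earliest order timestamp."""
    first = {}
    for o in orders:
        if o.user_id not in first or o.created_at < first[o.user_id]:
            first[o.user_id] = o.created_at
    return first


def cohort_metrics(orders):
    """Join first-order dates back onto every order and derive cohort fields."""
    first = first_order_dates(orders)
    rows = []
    for o in orders:
        first_at = first[o.user_id]
        rows.append({
            "order_id": o.order_id,
            "user_id": o.user_id,
            # Cohort = calendar month of the user's first order.
            "cohort_month": first_at.strftime("%Y-%m"),
            "days_since_first_order": (o.created_at - first_at).days,
        })
    return rows


def first_week_sales(orders, window=timedelta(days=7)):
    """Total sales per user within `window` of their first order (assumed 7 days)."""
    first = first_order_dates(orders)
    totals = defaultdict(float)
    for o in orders:
        if o.created_at - first[o.user_id] < window:
            totals[o.user_id] += o.sale_price
    return dict(totals)
```

For example, if one user orders on January 5, January 9, and February 1, all three orders land in the `2024-01` cohort at 0, 4, and 27 days since first order, and only the first two count toward that user's first-week sales.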

Example 2: Diagnosing a fast-changing metric

You don’t always have the luxury of stable, unchanging data before you need to provide a directional answer to “How’s X going?” or “What’s happening with Y?” If your business is changing rapidly, a same-day decision made on incomplete data can provide more value than a fully modeled analysis delivered days later. For example:

You work at an e-commerce company, and your design team just rolled out a change to the order checkout process to boost purchase conversions. However, you start getting flooded with support requests to cancel orders.

To understand what's happening, you quickly spin up a new metric counting orders canceled within 15 minutes of being placed. You notice a spike in cancellations coinciding with the design team's update. Investigating further, you discover that the new "Confirm" button immediately placed an order, but users expected it to lead to a final confirmation page since that had been the previous purchase flow.

After the designers revert the change, you add this cancellation metric to your shared model for regular tracking, so if a future change to the checkout process drives cancellations up again, you’ll catch it quickly.
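The cancellation metric in this example is simple enough to sketch directly. The Python below is an illustrative stand-in for a metric you might define in a workbook, not Omni syntax; the `Order` fields, the inclusive 15-minute boundary, and the choice to report a daily rate (quick cancels divided by orders placed) rather than a raw count are all assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Assumed definition of a "quick" cancel; the boundary is inclusive.
QUICK_CANCEL_WINDOW = timedelta(minutes=15)


@dataclass
class Order:
    # Hypothetical fields; canceled_at is None for orders that were never canceled.
    order_id: int
    placed_at: datetime
    canceled_at: Optional[datetime] = None


def is_quick_cancel(order, window=QUICK_CANCEL_WINDOW):
    """True if the order was canceled within `window` of being placed."""
    if order.canceled_at is None:
        return False
    return order.canceled_at - order.placed_at <= window


def quick_cancel_rate_by_day(orders):
    """Share of each day's orders that were canceled within the window."""
    placed, quick = {}, {}
    for o in orders:
        day = o.placed_at.date()
        placed[day] = placed.get(day, 0) + 1
        if is_quick_cancel(o):
            quick[day] = quick.get(day, 0) + 1
    return {day: quick.get(day, 0) / n for day, n in placed.items()}
```

Reporting a rate rather than a count keeps a busy sales day from looking like a cancellation spike, which matters when you are trying to line the spike up with a specific release.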

Example 3: Measuring the impact of a new sales channel

In many cases, a business decision won’t change even if the numbers are slightly imperfect. When a fast, data-informed recommendation beats a 100% accurate answer, analyze your data now to get a directional answer and then refine your model later. For example:

It’s the end of the quarter, and your marketing leader is debating whether it’s worth investing in partnerships as a lead strategy. To help them decide, you might dig through some Salesforce data to see how much new business is coming in as Direct sourced vs. Partner sourced. But alas, your SFDC data is a mess 😩. There’s no reliable identifier for “Partner leads,” only a hodgepodge of signals from various fields (“Partner” in Opportunity Name, a partner’s email domain in Lead Email, etc.).

In this case, an exact count of Partner-referred leads isn’t critical; you just need the general distribution to help make a decision. Instead of agonizing over a perfectly model-able “Is Partner sourced” field, quickly create a field in your Omni workbook that’s good enough to get you an answer for now. Then, when you’re ready to revisit the definition, you can tidy up that logic and materialize it in your semantic layer or shared model.
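A "good enough for now" partner flag like the one described above usually amounts to a few OR'd heuristics. Here is a hypothetical Python sketch using the two signals the example mentions; the `Opportunity` fields and the `PARTNER_DOMAINS` set are made up for illustration, and the substring match is intentionally loose (it would also match "Partnership"), which is acceptable for a directional split.

```python
from dataclasses import dataclass

# Hypothetical partner email domains; in practice this list would live somewhere shared.
PARTNER_DOMAINS = {"acmepartners.com", "resellerco.io"}


@dataclass
class Opportunity:
    # Hypothetical fields standing in for Opportunity Name and Lead Email.
    name: str
    lead_email: str = ""


def email_domain(email: str) -> str:
    """Lower-cased domain after the last '@', or '' if there is none."""
    return email.rsplit("@", 1)[1].lower() if "@" in email else ""


def is_partner_sourced(opp: Opportunity) -> bool:
    """Rough 'Is Partner sourced' flag: any one signal is enough.

    Deliberately loose; good enough for a directional split, not an exact count.
    """
    if "partner" in opp.name.lower():
        return True
    return email_domain(opp.lead_email) in PARTNER_DOMAINS


def source_split(opps):
    """Share of opportunities classified as Partner vs. Direct."""
    if not opps:
        return {}
    partner_share = sum(1 for o in opps if is_partner_sourced(o)) / len(opps)
    return {"Partner": partner_share, "Direct": 1 - partner_share}
```

Because each signal lives in its own small check, tightening the definition later (adding a field, dropping a noisy signal) is a local change, which mirrors the "tidy up and materialize later" step in the text.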

Example 4: Exploring a new dataset for the first time

You won’t always know exactly what you’re looking for, and that’s great, because it keeps the job interesting! The best parts of data work are often the things you didn’t expect, like that join relationship no one knew mattered or that product idea buried deep in a table. Data is meant to be explored first; modeling can come once you know which pieces are valuable. Modeling only those pieces can also help reduce model bloat and cloud costs (and improve query performance)! For example:

If you’re looking into customer usage of a new feature, you might start from a fresh dataset. There’s user data, events data, and product usage data, but you’re not sure how everything fits together yet. You just want to explore a bit first.

However, raw data can sometimes be tricky to work with (e.g., it may arrive as JSON blobs). And even after you clean the data, it can take a lot of effort to drop down to your data model to develop metrics and reporting, especially before you know what’s useful.

In Omni, you can set up your data model as you explore, using a point-and-click UI to clean your data, create metrics, and build a foundation to fine-tune in the IDE later. Then, once you’ve curated your data, your product team has the components it needs to understand how customers are engaging with the new feature and decide what to ship next.
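The JSON-blob cleanup mentioned in this example is often the first chore with a fresh events dataset. As a rough illustration, independent of any Omni feature, this Python sketch flattens a JSON `properties` column into dotted column names; the column names and the decision to keep arrays as JSON text instead of exploding them into rows are assumptions.

```python
import json
from typing import Any, Dict


def flatten(obj: Any, prefix: str = "") -> Dict[str, Any]:
    """Flatten nested JSON objects into dotted keys, e.g. {"a": {"b": 1}} -> {"a.b": 1}.

    Lists are kept as JSON text rather than exploded into separate rows.
    """
    flat = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            name = f"{prefix}.{key}" if prefix else key
            flat.update(flatten(value, name))
    elif isinstance(obj, list):
        flat[prefix] = json.dumps(obj)
    else:
        flat[prefix] = obj
    return flat


def parse_event_rows(raw_rows):
    """Turn rows whose hypothetical `properties` column holds a JSON string into flat dicts."""
    rows = []
    for row in raw_rows:
        # Treat a missing or null blob as an empty object.
        props = json.loads(row.get("properties") or "{}")
        flat = {k: v for k, v in row.items() if k != "properties"}
        flat.update(flatten(props, "properties"))
        rows.append(flat)
    return rows
```

If pandas is available, `pandas.json_normalize` performs a similar flattening and joins nested keys with `.` by default.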

A new way of data modeling

When we started discussing just-in-time data modeling, we realized it captured a familiar feeling: the desire for data modeling to feel fast, but not brittle; for it to help you do your best work, instead of becoming the work itself.

I know that feeling well. When I was in the weeds as a data analyst, I felt like I was constantly put in a tough spot – either get fast answers with ad-hoc queries, or trudge through a rigid data modeling process just to make my work reusable. It’s a challenge we help customers address every day, and it’s why our team is committed to building a better BI platform – one that speeds you up as you do your analysis and lets you easily reuse, build upon, and share that work.

We’re excited about this new data modeling paradigm, and we hope that you’ll give it a try in Omni.